AI

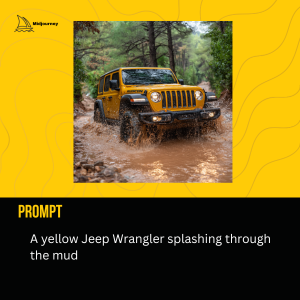

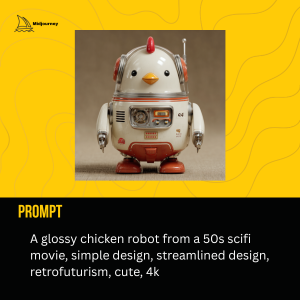

MidJourney: The Pinnacle of AI Image Creation

In the rapidly evolving landscape of artificial intelligence, MidJourney has emerged as a groundbreaking tool, redefining the boundaries of digital art and visual content creation. For designers, artists, and even casual enthusiasts, it offers an unprecedented ability to translate imagination into stunning visuals with remarkable ease. At www.thetechreview.net, we’ve taken a deep dive into MidJourney, and our verdict is clear: it stands as the premier AI tool for image creation today.

What is MidJourney and What Can It Do?

MidJourney describes itself as an independent research lab that explores new mediums of thought and expands the imaginative powers of the human species. In practical terms, its product is an AI program that generates images from natural language descriptions, known as “prompts.” Users interact with the MidJourney Bot via Discord, feeding it text prompts, and in return, the AI conjures up a set of four unique images based on those descriptions.

Its core abilities are vast and continually expanding:

- Image Generation from Text: This is the bread and butter of MidJourney. From abstract concepts to photorealistic scenes, the AI can render almost anything you can describe.

- Image-to-Image Generation: Users can provide an initial image along with text prompts to guide the AI, allowing for stylistic transfers, variations, or enhancements of existing visuals.

- Stylistic Exploration: MidJourney excels at understanding and applying various artistic styles, from classical painting and impressionism to cyberpunk and anime, allowing users to experiment with diverse aesthetics.

- High-Resolution Upscaling: Generated images can be upscaled to higher resolutions, making them suitable for various applications, including print and professional design work.

- Variations and Remixing: The tool allows users to generate variations of existing images or combine elements from multiple images and prompts to create entirely new compositions.

- Aspect Ratio Control: Users can specify the desired aspect ratio for their images, crucial for fitting different platforms and design layouts.

Why MidJourney Stands Out: The Best AI Tool for Image Creation

While other AI image generators exist, MidJourney consistently produces results that are not only technically impressive but also possess a distinct artistic quality and aesthetic appeal. Its algorithms seem to have a deeper understanding of composition, lighting, and color theory, leading to outputs that often feel more cohesive, imaginative, and “artistic” than those from competitors. The level of detail, the nuanced textures, and the often ethereal or dreamlike quality of its creations set it apart as the leader in the field.

Enhancing, Not Replacing: MidJourney’s Role for Designers

A common misconception surrounding AI art tools is that they threaten the livelihoods of graphic designers and artists. This couldn’t be further from the truth, especially with a tool as sophisticated as MidJourney. Instead, it acts as a powerful enhancement to a designer’s toolkit.

- Rapid Ideation and Brainstorming: Designers can quickly generate a multitude of visual concepts and ideas in minutes, exploring different styles, compositions, and themes without the time commitment of manual sketching or rendering. This accelerates the initial ideation phase of any project.

- Mood Board Creation: MidJourney is exceptional for creating rich, evocative mood boards, helping clients visualize the aesthetic direction of a project before detailed work even begins.

- Asset Generation (Conceptual): While not producing final, print-ready assets directly, it can generate conceptual elements, backgrounds, or textures that can then be refined, edited, and integrated by a human designer.

- Overcoming Creative Blocks: When inspiration wanes, a few prompts in MidJourney can spark new ideas and push creative boundaries.

- Personalized Stock Imagery: Instead of sifting through generic stock photo libraries, designers can generate highly specific and unique images tailored precisely to their project’s needs.

Ultimately, MidJourney is a co-creative partner. It frees designers from repetitive tasks and allows them to focus on higher-level strategic thinking, client communication, and the intricate refinement that only human creativity and skill can provide. The designer’s eye for detail, understanding of brand guidelines, typography, layout, and overall project goals remain indispensable.

A Deep Dive into MidJourney Versions

MidJourney is constantly evolving, with new versions bringing significant improvements in coherence, understanding, and aesthetic quality.

- V1 to V3: These early versions laid the groundwork, demonstrating the core capability but often producing more abstract or “painterly” results.

- V4: A major leap forward, V4 brought vastly improved coherence, better understanding of complex prompts, and a significant increase in photorealism capabilities. It was a game-changer for many users.

- V5/V5.1: These iterations further refined photorealism, improved hands and anatomy (a common challenge for AI), and offered greater control over details. V5.1, in particular, was known for its strong default aesthetic and ease of use.

- V6 (Current Flagship): The latest major release, V6, represents another monumental step. It boasts an even deeper understanding of natural language, allowing for more precise control over prompt elements. It significantly improves text rendering within images (a previously weak point) and offers unparalleled photorealism and detail. It also introduces new prompting techniques for greater artistic direction.

Each version has its unique characteristics, and users often experiment with different versions (using the --v parameter in prompts) to achieve specific artistic effects.
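To make the parameter syntax concrete, here is a minimal sketch of how a prompt with an aspect ratio and a model version might be assembled. The `build_prompt` helper is hypothetical and is not part of any MidJourney API; it only builds the text you would paste after the `/imagine` command, using the `--ar` and `--v` parameters.

```python
def build_prompt(description, aspect_ratio=None, version=None):
    """Assemble a prompt string with optional --ar and --v parameters.

    Hypothetical helper for illustration: it only formats text and does
    not talk to MidJourney in any way.
    """
    parts = [description.strip()]
    if aspect_ratio:
        # Aspect ratio is written as width:height, for example 16:9.
        parts.append(f"--ar {aspect_ratio}")
    if version:
        # Selects the model version, for example 5.1 or 6.
        parts.append(f"--v {version}")
    return " ".join(parts)


print(build_prompt("a serene forest at sunrise", aspect_ratio="16:9", version="6"))
# prints: a serene forest at sunrise --ar 16:9 --v 6
```

Keeping the description separate from the parameters makes it easy to rerun the same idea across several versions and compare the results side by side.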

Who is MidJourney Best For?

MidJourney caters to a wide audience:

- Graphic Designers: For rapid prototyping, mood boarding, conceptual art, and overcoming creative blocks.

- Illustrators and Artists: As a source of inspiration, a tool for generating background elements, or for exploring new artistic styles.

- Marketing Professionals: For creating unique visuals for campaigns, social media content, and advertisements without extensive photoshoots or stock photo licenses.

- Content Creators: Bloggers, YouTubers, and social media influencers can generate custom thumbnails, banners, and visual assets.

- Game Developers: For concept art, environmental design, and character inspiration.

- Architects and Interior Designers: For visualizing concepts and presenting mood-driven designs.

- Hobbyists and Enthusiasts: Anyone with an interest in art and technology can explore their creativity and produce stunning images.

Pricing

MidJourney operates on a subscription model, offering various tiers to suit different usage levels. While specific pricing can fluctuate, generally, the plans are structured around the number of “fast GPU hours” a user gets per month, which dictates how quickly images are generated. There are typically:

- Basic Plan: Suitable for casual users or those just starting.

- Standard Plan: Offers more GPU hours and often includes “relax mode” (slower generation but doesn’t consume fast hours) for more extensive use.

- Pro Plan: Designed for professional users requiring significant usage, offering the most fast hours and additional features like stealth mode (preventing images from being publicly visible on the MidJourney website).

MidJourney also occasionally offers a free trial, allowing new users to generate a limited number of images to experience the tool firsthand.

The New Frontier: Text Prompt to Video Creation

Perhaps the most exciting recent development in the AI visual space, and one that MidJourney has been actively exploring and rolling out in early forms, is the ability to generate video from text prompts. While still in its nascent stages compared to image generation, this capability is revolutionary. Imagine typing a description like “a serene forest with sunlight filtering through the leaves and a gentle breeze rustling the branches,” and receiving a short, animated clip. This opens up entirely new avenues for:

- Motion Graphics: Rapid prototyping of animated sequences.

- Storyboarding: Visualizing dynamic scenes for film or animation.

- Marketing Content: Creating engaging short video ads or social media snippets.

- Immersive Experiences: Building dynamic backgrounds or interactive elements for virtual environments.

This feature, as it matures, promises to be as transformative for video content as image generation has been for static visuals, further cementing MidJourney’s position at the forefront of AI-powered creativity.

Conclusion

MidJourney is more than just a tool; it’s a creative partner, an endless source of inspiration, and a testament to the incredible advancements in artificial intelligence. For anyone involved in visual creation, from seasoned professionals to curious hobbyists, it offers an unparalleled ability to bring ideas to life. It’s not here to replace human creativity but to augment it, pushing the boundaries of what’s possible and empowering us all to visualize our wildest imaginations. If you’re looking to elevate your creative workflow and explore the future of digital art, MidJourney is an indispensable asset.

AI

The AI Music Revolution: How Suno.com is Democratizing Music Creation

The music industry stands at the precipice of its most profound transformation since the advent of digital recording. At the forefront of this revolution is Suno.com, an artificial intelligence-powered platform that’s turning anyone with a creative spark into a potential music producer. This isn’t just another tech novelty; it’s a paradigm shift that’s about to send shockwaves through the entire music industry.

Here is a Reggae song I created using Suno

What is Suno?

Suno is an AI music generation platform that transforms text prompts into complete, studio-quality songs in minutes. Unlike simple beat makers or loop libraries, Suno creates original compositions from scratch, complete with melodies, harmonies, instrumentals, and even vocals with lyrics. The technology represents years of machine learning development, trained on vast datasets to understand musical structure, genre conventions, emotional resonance, and the intricate relationship between lyrics and melody.

What sets Suno apart from other AI music tools is its remarkable ability to generate music that doesn’t just sound technically correct—it sounds genuinely professional. We’re not talking about robotic, obviously-synthetic compositions. The songs Suno produces feature nuanced arrangements, dynamic performances, and an emotional depth that would make most listeners do a double-take when told they’re listening to AI-generated music.

How Suno Works: The Technology Behind the Magic

At its core, Suno utilizes advanced neural networks specifically designed for audio generation. The platform employs a sophisticated architecture that understands not just musical theory, but the cultural context and emotional weight of different genres, instruments, and vocal styles.

When you input a prompt into Suno, the AI processes multiple layers of musical information simultaneously. It considers:

- Genre and Style: Whether you want pop, rock, jazz, classical, hip-hop, country, or any blend thereof, Suno adjusts its compositional approach to match the stylistic conventions of your chosen genre.

- Mood and Emotion: Descriptors like “melancholic,” “uplifting,” “aggressive,” or “dreamy” guide the AI in selecting appropriate chord progressions, tempo, and instrumental textures.

- Structure: The AI automatically creates verse-chorus-bridge arrangements that feel natural and engaging, with appropriate transitions and dynamic builds.

- Instrumentation: Suno intelligently selects and blends virtual instruments that suit your genre and mood, from acoustic guitars and piano to synthesizers and full orchestral arrangements.

- Vocals and Lyrics: Perhaps most impressively, Suno generates vocal performances that capture the right emotional tone, complete with subtle imperfections that make them sound remarkably human.

The generation process typically takes just a minute or two, after which you receive a complete, radio-ready track that you can download and use.

The Quality That’s Turning Heads

The quality of Suno’s output is nothing short of extraordinary. Early AI music tools produced novelty tracks at best—interesting experiments that were clearly machine-generated. Suno has crossed into territory where many of its productions could legitimately compete with human-created music on streaming platforms.

The vocals are particularly impressive. Gone are the days of flat, lifeless AI singing. Suno’s vocal synthesis includes vibrato, breath control, emotional inflection, and even genre-appropriate vocal techniques. A country song might feature twangy delivery and slight Nashville pronunciation, while a soul track comes with powerful, gospel-influenced runs and emotional grit.

The instrumental arrangements show similar sophistication. A rock song doesn’t just feature guitar, bass, and drums playing in time—it includes subtle details like guitar pick scrapes, drum fills at exactly the right moments, and bass lines that lock in with the kick drum in that ineffable way that makes music feel “tight.” Jazz compositions swing with authentic rhythm section interplay. Electronic tracks pulse with carefully programmed synthesizers and meticulously crafted drops.

The mixing and mastering are also remarkably professional. Tracks come with balanced frequencies, appropriate compression, and a polished sound that would typically require hours of engineering work in a traditional studio.

Two Paths to Creation: AI Lyrics or Your Own

One of Suno’s most flexible features is its dual-mode approach to lyrics. You can absolutely let Suno handle everything, providing just a genre and mood description, and the AI will generate both music and lyrics that work together seamlessly. This is perfect for quick ideation or when you’re curious what the AI might create around a particular theme.

However, the real magic happens when you bring your own lyrics to the platform. This is where Suno transforms from a novelty into a genuine creative tool. You maintain complete creative control over the message, poetry, and narrative of your song while leveraging AI for the musical execution.

This is precisely how I use the platform, and I’ve found that combining different AI tools creates an even more powerful workflow. I use Google’s Gemini to write my song lyrics. Gemini excels at understanding creative direction, generating poetic language, maintaining consistent themes, and structuring verses and choruses that tell compelling stories. I can have a conversation with Gemini about the emotional arc I want, specific imagery I’m trying to evoke, or even technical requirements like syllable count and rhyme schemes.

Once I have lyrics I’m happy with, I simply paste them into Suno along with genre and style specifications. The AI then composes music specifically tailored to those lyrics—matching the syllable patterns, emphasizing key words with melodic peaks, and adjusting the mood and energy to complement the lyrical content.

This hybrid approach combines the best of both worlds: the nuanced language and storytelling capabilities of advanced language models with the musical expertise of specialized audio AI. You’re essentially collaborating with multiple AI systems, each playing to its strengths, while you remain the creative director orchestrating the final product.

The Storm Coming for the Music Industry

Make no mistake: technology like Suno is about to fundamentally reshape the music industry as we know it. The implications are both exciting and, for some, deeply concerning.

Democratization of Production: For generations, creating professional-quality music required significant financial investment—studio time, equipment, producers, session musicians, and mixing engineers. These barriers kept many talented songwriters and creative minds out of the industry. Suno obliterates these obstacles. A teenager with a laptop and a Suno subscription can now produce music that rivals major label releases. This democratization will unleash a tsunami of new music and voices that were previously excluded from the industry.

Speed and Volume: Traditional music production is time-intensive. Writing, arranging, recording, and producing a single song can take weeks or months. With Suno, that same process takes minutes. Artists can now iterate rapidly, testing different arrangements, genres, and approaches without significant time or financial investment. They can release music at a pace that was previously impossible, potentially flooding streaming platforms with unprecedented volumes of content.

The Economics of Music Creation: When production costs approach zero, the entire economic model of the music industry shifts. Record labels have traditionally justified their existence—and their large share of revenues—by bearing the financial risk of production. If that production cost disappears, what value do they provide? Artists may increasingly go independent, keeping more of their earnings and maintaining creative control.

The Definition of Artistry: Perhaps the most contentious question is philosophical: Is music created with AI tools “real” music? Does it count as artistic expression? This debate echoes historical controversies when synthesizers, drum machines, and auto-tune were introduced. In each case, the initial backlash eventually gave way to acceptance and integration. AI music tools will likely follow this same trajectory, becoming another instrument in the modern musician’s toolkit rather than a replacement for human creativity.

Job Displacement: The harsh reality is that some roles in music production will become less necessary. Session musicians, certain types of producers, and audio engineers may find their services in less demand as AI can replicate much of what they do. However, new opportunities will also emerge—AI music directors, AI-human collaboration specialists, and roles we haven’t even imagined yet.

The Human Element Remains Essential

Despite AI’s capabilities, human creativity remains irreplaceable. Suno doesn’t replace songwriters—it empowers them. The platform still requires human guidance: someone needs to conceive the song’s concept, craft the lyrics (if not using AI-generated ones), and make creative decisions about genre, mood, and style. The emotional truth, the personal experience, the unique perspective—these come from humans.

Moreover, the curation and refinement process remains human-driven. While Suno might generate a great first draft, artists still need to decide which of multiple generations works best, which sections to keep, and how the song fits into a larger body of work or album concept.

Looking Ahead

As Suno and similar platforms continue to evolve, we can expect even more impressive capabilities. Future versions might allow for more granular control over arrangements, enable real-time collaboration between multiple users and AI, or even generate music that adapts to listener feedback in real time.

The music industry is indeed about to be taken by storm, but rather than viewing this as a threat, we might better understand it as an evolution. Just as digital audio workstations didn’t destroy music—they enabled more people to create it—AI music generation will expand the boundaries of what’s possible while creating new opportunities for human creativity to flourish.

Suno.com represents more than just impressive technology. It’s a glimpse into a future where the barriers between musical inspiration and realization have all but disappeared, where anyone with something to express can craft professional music to convey it. The storm is coming, and it’s bringing democratization, disruption, and an explosion of creativity that will reshape music for generations to come.

Visit our AI Reviews section for more great info.

Apps

What is Vibe Coding? A New Era in Software Development


Coined by AI researcher Andrej Karpathy, vibe coding is an emerging software development practice that uses artificial intelligence (AI) to generate functional code from natural language prompts. Instead of meticulously writing code line-by-line, a developer’s primary role shifts to guiding an AI assistant to generate, refine, and debug an application through a conversational process. The core idea is to focus on the desired outcome and let the AI handle the rote tasks of writing the code itself.

Who is it for?

Vibe coding is not just for professional developers. It’s designed to make app building more accessible to those with limited programming experience, enabling even non-coders to create functional software. For professional developers, it acts as a powerful collaborator or “pair programmer,” accelerating development by automating boilerplate and routine coding tasks. This allows experienced developers to focus on higher-level system design, architecture, and code quality.

What can you do with it?

The applications of vibe coding are vast, especially for rapid ideation and prototyping. It’s well-suited for:

- Prototyping and MVPs (Minimum Viable Products): Quickly create a functional prototype to test an idea without spending a lot of time on manual coding.

- Side Projects: Build small, personal tools or applications for specific needs.

- Data Scripts and Automation: Automate repetitive tasks or create small data processing scripts.

- UI/UX Mockups: Generate a visually functional user interface based on a description.

- Learning and Experimentation: Use AI tools to learn new programming languages or frameworks by asking them to explain the code they generate.

How do you learn it?

Learning vibe coding is less about mastering syntax and more about mastering communication with AI. Here are some key steps and best practices:

- Start with the right tools. Popular choices include GitHub Copilot, ChatGPT, Google Gemini, and platforms like Replit and Cursor.

- Be specific and break down complex tasks. The “garbage in, garbage out” principle applies. Instead of asking the AI to “build a social media app,” start with a specific, manageable task, like “create a Python function that reads a CSV file.”

- Iterate and refine. The first output may not be perfect. The process is a continuous loop of describing, generating, testing, and refining the code. You guide the AI with feedback like, “That works, but add error handling for when the file is not found.”

- Always review and verify. Do not blindly trust the AI’s output. Review the code it generates to ensure accuracy, security, and quality. A developer’s ability to read and debug code becomes an even more critical skill.
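As an illustration of the describe, generate, and refine loop above, here is roughly what the result might look like after the two example prompts: a function that reads a CSV file, then revised to handle a missing file. This is a sketch under stated assumptions, not the output of any particular AI tool; the function name `read_csv_rows` and the choice to return an empty list on failure are assumptions made for the example.

```python
import csv


def read_csv_rows(path):
    """Read a CSV file and return its rows as a list of dictionaries.

    The first row is treated as the header. If the file does not exist,
    a message is printed and an empty list is returned (an assumed
    design choice; raising the error would be equally reasonable).
    """
    try:
        with open(path, newline="", encoding="utf-8") as handle:
            return list(csv.DictReader(handle))
    except FileNotFoundError:
        # Added in the second iteration: "add error handling for when
        # the file is not found."
        print(f"File not found: {path}")
        return []


rows = read_csv_rows("does_not_exist.csv")
print(len(rows))
```

Reviewing even a short function like this is where the "always verify" step matters: a reader should check the encoding, the header assumption, and whether silently returning an empty list suits the project.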

Is there a future job for it?

Vibe coding is not seen as a replacement for human developers, but rather as an amplifier. The future of jobs in this space is likely to involve a shift in roles:

- AI-First Developer: A developer who builds products primarily using modern AI-powered tools.

- Prompt Engineer: A specialist who designs clear and effective prompts to get the best possible output from AI systems.

- Oversight Lead: A professional who validates, debugs, and secures AI-generated codebases.

Vibe coding will likely continue to evolve and create new opportunities for those who adapt and learn to work effectively with AI tools.

Q&A

Q: Can a non-coder truly build a full application with vibe coding?

A: While vibe coding can enable a non-coder to create a functional prototype or a simple app, complex, production-level applications with robust features and security still require the expertise of a professional developer to review, fix, and maintain the codebase.

Q: Does vibe coding make learning traditional programming languages obsolete?

A: No. While vibe coding automates much of the manual coding, a fundamental understanding of programming concepts, data structures, and algorithms is still essential for guiding the AI, debugging, and ensuring the quality and security of the final product.

Q: What are the main downsides of vibe coding?

A: Vibe coding can lead to technical complexity, a lack of architectural structure, and code quality issues. Debugging can be challenging, as the code is dynamically generated. There are also potential security risks if the generated code is not properly vetted.


AI

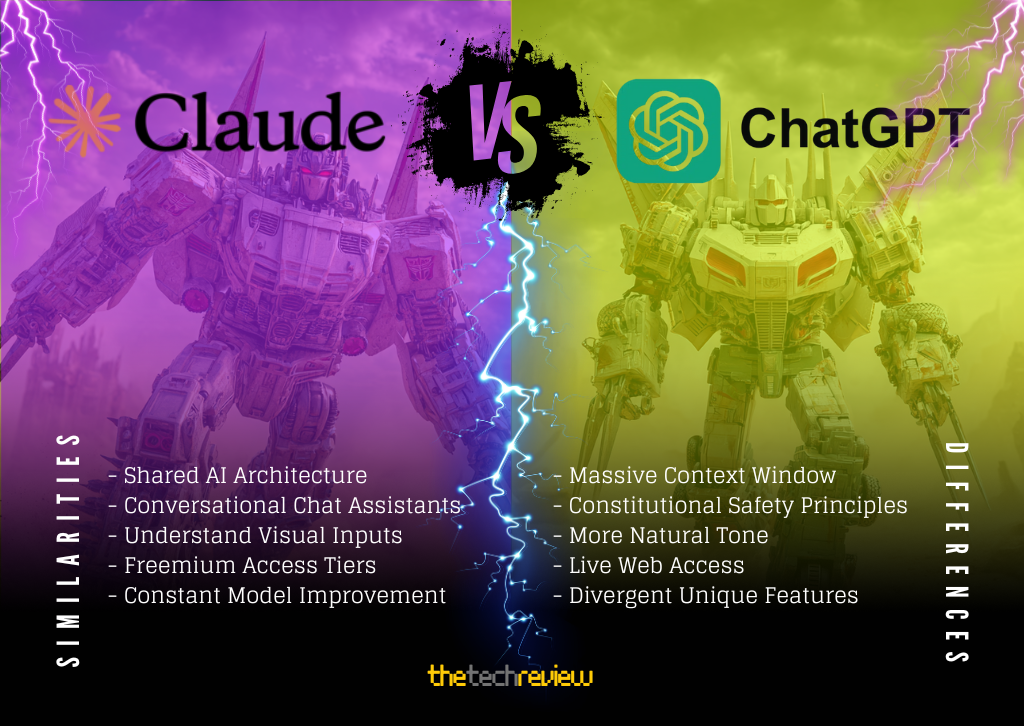

Claude vs. ChatGPT: Key Advantages of Anthropic’s AI


While both Claude, developed by Anthropic, and ChatGPT, from OpenAI, are powerful large language models at the forefront of artificial intelligence, Claude possesses several distinct advantages that make it a compelling choice for specific users and applications. These benefits primarily revolve around its massive context window, constitutional AI principles, and a more natural-feeling conversational ability.

One of Claude’s most significant differentiators is its large context window. The latest version, Claude 3, offers a context window of up to 200,000 tokens, equivalent to approximately 150,000 words or over 500 pages of text. At the time of writing, this exceeds the context window available in ChatGPT. This capacity allows Claude to process and analyze vast amounts of information in a single prompt, making it exceptionally well-suited for tasks such as summarizing lengthy reports, analyzing complex legal documents, or maintaining coherence in long, intricate conversations. For users who need an AI to grasp the nuances of extensive textual data, Claude’s context handling is a clear advantage.
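The conversion from tokens to words and pages is simple arithmetic, sketched below. The ratios are assumptions rather than official figures: about 0.75 English words per token is a common rule of thumb, and 300 words per page is an arbitrary choice. Real ratios vary with the language and the tokenizer.

```python
# Assumed conversion factors (rules of thumb, not vendor specifications).
WORDS_PER_TOKEN = 0.75
WORDS_PER_PAGE = 300


def estimate_words(tokens):
    """Estimate how many English words fit in a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


def estimate_pages(tokens):
    """Estimate the page count for a given number of tokens."""
    return estimate_words(tokens) / WORDS_PER_PAGE


print(estimate_words(200_000))  # prints 150000
print(estimate_pages(200_000))  # prints 500.0
```

With fewer words per page, such as double-spaced text, the same 150,000 words spread across more than 500 pages.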

Anthropic’s commitment to developing a “constitutional AI” also sets Claude apart. This approach involves training the AI on a set of core principles designed to ensure its outputs are helpful, harmless, and honest. This emphasis on ethical and safe AI behavior can result in outputs that are more reliable and less prone to problematic or biased content. While OpenAI also invests heavily in safety measures, Anthropic’s foundational focus on a constitutional framework is a core tenet of Claude’s design and a key benefit for users concerned with the ethical implications of AI.

In terms of conversational fluency, many users report that Claude’s responses feel more natural and less “robotic” than those of ChatGPT. It often excels at creative writing tasks, generating more nuanced and human-sounding prose. This can be attributed to its training and architectural choices, which prioritize a more thoughtful and context-aware conversational style.

Furthermore, for developers and those working with code, Claude offers a feature called “Artifacts.” This allows users to see, interact with, and build upon the code generated by the AI in a dedicated window, creating a more integrated and efficient workflow. While ChatGPT is also a capable coding assistant, Claude’s Artifacts provide a more seamless and user-friendly experience for iterative development.

Finally, in some head-to-head comparisons and benchmarks, different versions of Claude have demonstrated superior performance in specific areas, such as certain reasoning tasks and standardized exams. While the performance of both models is constantly evolving with new updates, Claude has proven to be a formidable competitor and, in some instances, a more capable tool for particular intellectual challenges.

In conclusion, while ChatGPT remains a versatile and widely used AI assistant, Claude offers compelling advantages in its massive context window, its foundational commitment to ethical AI principles, its natural conversational style, and its developer-friendly features. For users who prioritize these specific capabilities, Anthropic’s Claude presents a powerful and increasingly popular alternative.

Similarities

- Core Technology: Both are advanced Large Language Models (LLMs) based on the transformer architecture, designed for natural language understanding and generation.

- Primary Function: They are both general-purpose conversational AI assistants capable of a wide range of tasks, including answering questions, writing essays, summarizing text, generating code, and creative writing.

- Multimodality: Both models have versions that are multimodal, meaning they can understand and process not just text but also visual inputs like images and documents.

- Accessibility: Both offer a free tier for general use and more powerful, feature-rich subscription models (Claude Pro, ChatGPT Plus/Team/Enterprise) for advanced users.

- Continuous Development: Both are under constant development by their respective companies (Anthropic and OpenAI), with frequent updates that improve their capabilities, accuracy, and safety.

Differences

- Context Window: This is Claude’s most significant advantage. The Claude 3 models offer a 200,000 token context window, allowing them to process and recall information from extremely long documents (approx. 150,000 words), while ChatGPT’s context window is considerably smaller.

- AI Safety Philosophy: Claude is built on a “Constitutional AI” framework, where it’s trained to align its responses with a core set of principles (a “constitution”). ChatGPT primarily uses Reinforcement Learning from Human Feedback (RLHF), relying more on human reviewers to guide its behavior.

- Conversational Tone: Many users find Claude’s conversational style to be more natural, reflective, and less overtly “AI-like.” ChatGPT’s tone can sometimes be more direct and structured, though this can be modified with custom instructions.

- Real-Time Web Access: ChatGPT, particularly in its paid versions, can browse the live internet to provide up-to-the-minute information and cite current sources. Claude’s knowledge is generally limited to its training data, which has a specific cutoff date.

- Ecosystem and Features:

  - ChatGPT has a more mature ecosystem with features like Custom GPTs (allowing users to create specialized versions of the chatbot) and a vast library of third-party plugins.

  - Claude offers unique features like Artifacts, which provides a dedicated workspace to view, edit, and iterate on generated content like code snippets or documents directly within the interface.

- Performance on Specific Tasks: While both are highly capable, they exhibit different strengths. At its launch, Claude 3 Opus surpassed GPT-4 on several industry benchmarks for reasoning and knowledge. Anecdotally, users often prefer Claude for long-form creative writing and summarizing massive texts, while ChatGPT’s broader ecosystem and web access make it a powerful tool for research and specialized tasks.


-

Photography6 months ago

Photography6 months agoSony FE 16mm f/1.8 G Review: The Ultra-Wide Prime for the Modern Creator

-

Home Tech7 months ago

Home Tech7 months agoThe Guardian of Your Threshold: An In-Depth Review of the Google Nest Doorbell

-

Tablets9 months ago

Tablets9 months agoClash of the Titans: 13″ iPad Pro M4 vs. Samsung Galaxy Tab S10 Ultra – Which Premium Tablet Reigns Supreme?

-

Computers7 months ago

Computers7 months agoAsus ProArt Display 6K PA32QCV Review: A Visual Feast for Professionals

-

Computers7 months ago

Computers7 months agoASUS Zenbook Duo: A Pretty Awesome Dual-Screen Laptop

-

Photography7 months ago

Photography7 months agoAdobe’s “Project Indigo” is the iPhone Camera App We’ve Been Waiting For, and It’s Awesome

-

Health Tech7 months ago

Health Tech7 months agoLumen Metabolism Tracker: A Deep Dive into Your Metabolic Health

-

Home Tech7 months ago

Home Tech7 months agoRevolution R180 Connect Plus Smart Toaster: More Than Just Toast?

-

Photography6 months ago

Photography6 months agoDJI Osmo 360 go: The Next Generation of Immersive Storytelling?

-

Computers7 months ago

Computers7 months agoApple Mac Studio Review: A Desktop Powerhouse Redefined

-

Computers7 months ago

Computers7 months agoSamsung 15.6” Galaxy Book5 360 Copilot AI Laptop: A Deep Dive into the Future of Productivity

-

Wearables6 months ago

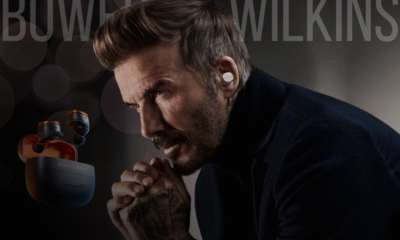

Wearables6 months agoBowers & Wilkins Pi8 McLaren Edition Review: A Supercar for Your Ears?